All three currently available Llama 2 model sizes (7B, 13B, and 70B) are trained on 2 trillion tokens. Meta developed and publicly released the Llama 2 family of large language models (LLMs). The Llama 2 7B model on Hugging Face (meta-llama/Llama-2-7b) ships as a PyTorch `.pth` checkpoint. There are two common formats for LLaMA checkpoints: the original format provided by Meta and … The 7-billion-parameter model, Llama 2 7B, is about 12.6 GB in size, so it should download fairly quickly. Its configuration uses `vocab_size` 32000, `hidden_size` 4096, `intermediate_size` 11008, and `num_hidden_layers` 32. Llama 2 (Meta AI): this release includes model weights and starting code for pretrained and fine-tuned Llama language models.
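The config values above are enough to estimate the model's size. This is a minimal sketch that assumes the standard Llama 2 7B layer layout: no bias terms, untied input and output embeddings, full multi-head attention, a three-projection SwiGLU MLP, and RMSNorm weights. The helper name `llama_param_count` is hypothetical.

```python
# Rough parameter count for Llama 2 7B from its config values.
# Assumptions (not stated in the text): no bias terms, untied input embedding
# and output head, full multi-head attention (q/k/v/o each hidden x hidden),
# a SwiGLU MLP with three projections, two RMSNorm weight vectors per layer,
# and one final RMSNorm.


def llama_param_count(vocab_size: int, hidden_size: int,
                      intermediate_size: int, num_hidden_layers: int) -> int:
    embed = vocab_size * hidden_size            # token embedding table
    lm_head = vocab_size * hidden_size          # output projection (untied)
    attn = 4 * hidden_size * hidden_size        # q, k, v, o projections
    mlp = 3 * hidden_size * intermediate_size   # gate, up, down projections
    norms = 2 * hidden_size                     # two RMSNorm weights per layer
    per_layer = attn + mlp + norms
    final_norm = hidden_size                    # RMSNorm after the last layer
    return embed + lm_head + num_hidden_layers * per_layer + final_norm


total = llama_param_count(vocab_size=32000, hidden_size=4096,
                          intermediate_size=11008, num_hidden_layers=32)
size_bytes = total * 2                          # 2 bytes per fp16/bf16 weight
print(f"{total:,} parameters")
print(f"{size_bytes / 1e9:.1f} GB = {size_bytes / 2**30:.1f} GiB in 16-bit precision")
```

Under these assumptions the count comes to 6,738,415,616 parameters. At 2 bytes per weight that is about 13.5 GB, or 12.6 GiB.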

We have collaborated with Vertex AI from Google Cloud to fully integrate Llama 2, offering pretrained, chat, and Code Llama models in various sizes. There are many ways to set up Llama 2 locally; we'll discuss one of them, which makes it easy to set up and start using Llama quickly. Understanding Llama 2 and model fine-tuning: Llama 2 is a collection of second-generation open-source LLMs from Meta that comes with a … This manual offers guidance and tools to assist in setting up Llama, covering access to the model and hosting. Llama 2 is the second generation of a fast and powerful artificial intelligence (AI) model that Meta initially designed for research.

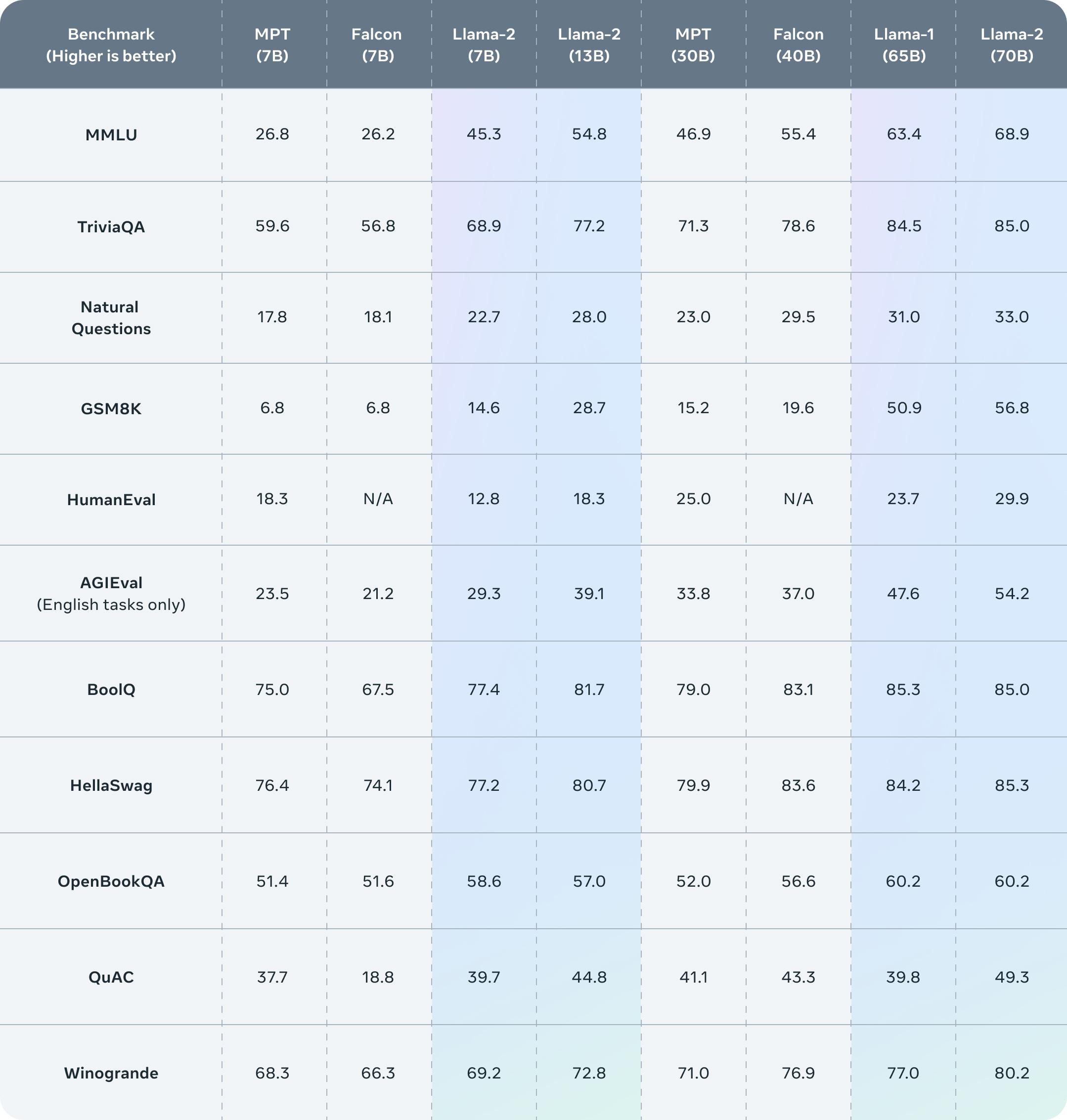

Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Llama 2 outperforms other open-source language models on many external benchmarks, including reasoning, coding proficiency, and knowledge tests. It is released under a very permissive community license and is available for commercial use. LongLLaMA, developed by researchers outside Meta, was built on the foundation of OpenLLaMA and refined using the Focused Transformer (FoT) method. Unlike the first generation, each Llama 2 model comes in two versions: a regular pretrained version without chat or safety tuning, and a chat-optimized, aligned version.
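The two versions are prompted differently. The base model simply continues raw text, while the chat version expects its turns to be wrapped in special markers. This is a minimal sketch that assumes the `[INST]`/`<<SYS>>` layout used by the chat code in Meta's reference `llama` repository. `build_chat_prompt` is a hypothetical helper. The `<s>`/`</s>` markers are written as literal strings only for readability, because a real tokenizer adds BOS and EOS as token IDs.

```python
# Sketch of the Llama 2 chat prompt layout ([INST] / <<SYS>> markers).
# "<s>" and "</s>" stand in for the BOS and EOS tokens; in real use the
# tokenizer inserts them as special token IDs, not as literal text.
from typing import List, Optional, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_chat_prompt(turns: List[Tuple[str, Optional[str]]],
                      system: Optional[str] = None) -> str:
    """turns: (user_message, assistant_reply) pairs; the last reply is None."""
    parts = []
    for i, (user, reply) in enumerate(turns):
        if i == 0 and system:
            # The system prompt is folded into the first user message.
            user = B_SYS + system + E_SYS + user
        segment = f"<s>{B_INST} {user.strip()} {E_INST}"
        if reply is not None:
            # A completed exchange is closed with EOS.
            segment += f" {reply.strip()} </s>"
        parts.append(segment)
    return "".join(parts)


if __name__ == "__main__":
    print(build_chat_prompt([("Hi!", "Hello! How can I help?"),
                             ("Name a llama fact.", None)],
                            system="You are a helpful assistant."))
```

The sketch folds the system prompt into the first user turn instead of giving it a turn of its own. This mirrors how the reference chat code handles it.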

Could not load Llama model from path: Xxxx/llama-2-7b-chat.ggmlv3.q4_0.bin (Issue #438, PromtEngineer/localGPT on GitHub). We then ask the user to provide the model's repository ID and the corresponding file name; if none is provided, we use TheBloke/Llama-2-7B-chat. BOS and EOS are special beginning-of-sequence and end-of-sequence tokens.
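The repository-ID and file-name fallback described above can be sketched as follows, together with a check that the file exists before any loader sees it. Everything here is hypothetical and is not localGPT's actual code. That includes `resolve_model_path`, the `<models_dir>/<owner>/<repo>/<filename>` layout, and the default file name, which is borrowed from the issue title.

```python
# Hypothetical sketch (not localGPT's code): resolve a model repository ID and
# file name, falling back to defaults, and verify the file exists locally.
import os
import tempfile
from typing import Optional

DEFAULT_REPO_ID = "TheBloke/Llama-2-7B-chat"          # default named in the text
DEFAULT_FILENAME = "llama-2-7b-chat.ggmlv3.q4_0.bin"  # assumption: from the issue title


def resolve_model_path(models_dir: str,
                       repo_id: Optional[str] = None,
                       filename: Optional[str] = None) -> str:
    """Return the local path for the model file, or raise if it is missing."""
    repo_id = repo_id or DEFAULT_REPO_ID
    filename = filename or DEFAULT_FILENAME
    # Assumed layout: <models_dir>/<owner>/<repo>/<filename>
    path = os.path.join(models_dir, *repo_id.split("/"), filename)
    if not os.path.isfile(path):
        raise FileNotFoundError(
            f"model file not found at {path!r}; "
            "check the repository ID and file name")
    return path


if __name__ == "__main__":
    # Demo: create an empty placeholder file in a temporary directory.
    with tempfile.TemporaryDirectory() as models_dir:
        target_dir = os.path.join(models_dir, "TheBloke", "Llama-2-7B-chat")
        os.makedirs(target_dir)
        open(os.path.join(target_dir, DEFAULT_FILENAME), "wb").close()
        print(resolve_model_path(models_dir))  # uses both defaults
```

Failing early with the fully resolved path makes a wrong ID or file name obvious. Otherwise the mistake surfaces later as a generic load error.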
